Ridding Waste Heat from Electronics

We currently have an almost 1,600 sq ft home on a slab. We have a 3-ton HVAC unit in a spider system setup with one centralized return in a hallway near the two smaller bedrooms and second bathroom.

I have some gaming PCs that were set up in our bedroom, and the bedroom got way too hot after my recent upgrades for streaming.

With that being said, we moved my setup and my wife's into our spare bedroom and are using it as a studio. On average, it is 10-15 degrees hotter in there.

No matter what, the HVAC unit just cannot seem to keep up with this additional load unless the equipment were in the main open area for the kitchen, dining room, and living room.

What is the most efficient way to fix this at the moment? This is a brand new home, built within the last 3 years.

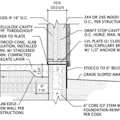

Replies

How many watts does this equipment draw?

Could you reduce the heating load your equipment is generating?

Newer equipment is much more power efficient than older equipment.

Does this equipment really need to live in your home or the conditioned part of your home?

Could you move the return duct to the equipment room?

I expect Bill will have some better ideas before long.

Walta

Also, does that equipment need to be on all the time, or can it be powered down between gaming sessions?

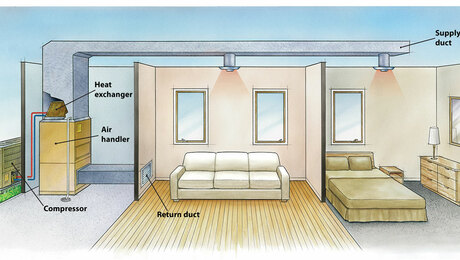

I suspect the issue is that the system with the central return can't exhaust the heat generated by that equipment quickly enough. It is generally easier and more efficient to deal with the heat near the source, so I'd recommend some powered ventilation on the return side. This could be a transfer duct arrangement between the room with the heat-generating equipment and the hallway, possibly with a few muffin fans in it to help exhaust that hot air. A 3 ton system would be able to remove about 10 kW of heat, so unless your system is barely able to handle your home's cooling load, I would expect it to have some extra capacity to deal with a few hundred watts of computer equipment.

If you have enough equipment (I know some of those gaming systems get pretty involved) to generate a kilowatt or more of heat, you may want to consider a spot cooler (portable air conditioner), and set it up to cool the room with the equipment. This won't be as efficient, since typical small spot coolers usually exhaust their hot air along with a portion of the cooled air from the space they're cooling, but they're inexpensive to buy and install. Better would be a small mini split in the room with the equipment. I commonly spec 3/4 and 1 ton split systems for small network equipment rooms at work, where they are a good fit.

I'd try a transfer duct first and see if that is enough. You can make your own "transfer duct" arrangement with two registers and a short section of duct -- or even some plywood -- to connect the hot room to the hallway. Muffin fans are readily available from places like Digikey. Note that the transfer duct will also carry sound, so screams of delight (or agony) from someone in the midst of an intense gaming session will now be much more audible in other areas of the house.

Bill

Walta100 and Charlie,

The equipment in general at a minimum is drawing roughly 2000-2500 watts total. One item must be on 24/7 for the time being until I figure out migration to the server via other methods and protocols. The one item that must be on 24/7 does OK in the room by itself (I'm cold in the AM when not doing much, but then hot in the afternoons and evenings when I'm really in there). The one computer that needs to stay on will also be moved up in power in the future, and by itself will consume about 1500 watts, maybe more, as I need more processing power for sound and video.

I had thought about using my server to do most of this stuff and remoting in, in some way, shape, or form, but even remote desktop lags across Ethernet... do I throw a 50' DisplayPort cable in the wall then? I dunno haha. I have started looking into undervolting my desktops to help while keeping performance the same, however.

The other desktop is my wife's gaming PC, which will be on and off as needed and will eventually be pushing about 1000-1500 watts when in heavy use with me while also blending 1080p streams at a minimum.

The server is heavily powered and is in the garage, networked in. There is no video card in it; however, in a few months it will support a GTX 1080 as I move from a 3080 Ti to either a 3090 Ti or 4090 Ti and add another 16-32 GB of RAM on my studio desktop.

When all the lighting automation for the studio moves to the server (there are security concerns for now, as I'm not sure which route to go enterprise-wise), the studio PC will be able to be shut down when not in use. I also run water cooling across the board in the room, which I believe puts heat into the room more quickly, if I am not mistaken. I have had some HVAC training but not much, so I apologize; I only did residential work for a few years.

Bill,

I have a feeling this truly may be the case, and it is something I have thought hard about, including moving the unit into the attic, installing returns in each room in addition to the central return, and downsizing the unit. We have also thought about adding an energy recovery ventilator, as I believe we are negatively pressured and will need to exhaust more in the kitchen (smell and grease issues, so possibly a grease trap) as well as the MBR bathroom (humidity issues, with condensate dripping from the vents when the shower is on, even with the 5" exhaust fan going).

I have very much thought about transfer ducts into the attic above the doors of each room that needs one. With that being said, haha, how do I mitigate sound, given that I have an indoor studio, until I can afford to decouple walls, add mass loaded vinyl, etc.?

The spot cooler has also been a massive consideration. I think I would be perfect with an additional, I'd say, 2500-5000 BTU, including additions as I move forward, but I would also like to fix the issue across the home for future endeavors instead of applying a quick fix. We have honestly been thinking about putting minisplits across the home to control the temperature of each room and then just adding returns to the rooms to mix air and clean/purify. Would this be ideal and efficient from a monthly cost standpoint?

We definitely hit higher than 10 kW of heat load across the entirety of the home. I have started adding insulation where it was compacted by cable installs, although it's a little-by-little thing with money at the moment. I have also been sealing the attic penetrations at the walls with foam and have been thinking of insulating the can lights from above with a cover and foam to seal them. I have also thought of adding reflective insulation in the attic along the roof to mitigate heat transfer from the solar panels to the roof and from the roof to the attic. Is this logical as we move forward while sealing? Any alternatives instead?

The muffin fans are an amazing idea as well, though any help with sound transfer would be greatly appreciated too. The fan will muffle a lot, per se, but I also need good isolation. I may just try this and play with it. Would a filter help reduce sound without killing the fan, maybe a light mesh filter to help bounce sound waves (similar to minisplit plastic filters)? Plywood would definitely help too, haha. Thanks for adding the screams of joy or agony, haha. That got me laughing and could definitely be a concern.

Thanks for the help to both of you! I know this is a fuck ton and you may not be invested to reply but I do look forward to it!

Tim

An electrical load of 2500 watts is equivalent to about 8500 BTU/hr. For a 1600 SF house built to the latest energy codes that could conceivably be the entire cooling load most if not all of the time. So basically you're taking the cooling load of the house, doubling it, and putting it all in one room.
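As a rough sketch of the conversion used above: essentially all of the electrical power a computer draws ends up as heat in the room, and 1 watt is about 3.412 BTU/hr. The function name here is just illustrative.

```python
# Standard conversion factor: 1 watt is approximately 3.412 BTU/hr.
BTU_PER_HR_PER_WATT = 3.412


def watts_to_btu_per_hr(watts: float) -> float:
    """Convert an electrical load in watts to a heat load in BTU/hr."""
    return watts * BTU_PER_HR_PER_WATT


if __name__ == "__main__":
    # The two ends of the 2000-2500 W range quoted in the thread.
    for load_w in (2000, 2500):
        print(f"{load_w} W is about {watts_to_btu_per_hr(load_w):.0f} BTU/hr")
```

For 2500 W this gives roughly 8,500 BTU/hr, which matches the figure quoted above.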

You've got enough cooling capacity with a 3-ton AC. There are really two challenges: directing enough of the cooling into the studio so that it is adequately cooled and the rest of the house isn't over-cooled, and getting the whole thing to be adequately controlled by a thermostat.

I think the ideal solution would be to split your duct system into two branches and control each with a separate thermostat and damper valves. I'd imagine with a 3-ton system you'd have 18-inch or 16-inch trunks and then 5 or 6 inch ducts going into the rooms? For the studio itself you probably need to replace the existing duct with a 12" or so duct and add an equal-sized return. So you'd need to put in a wye like this: https://www.theductshop.com/shop/16x16x12-wye-p-139.html

And then a 12" motorized damper on the branch that goes into the studio:

https://www.homedepot.com/p/Suncourt-12-in-Motorized-Adjustable-Damper-Closed-ZC212/306734240

And a 16" motorized damper on the branch that goes into the rest of the house. Each damper is controlled by its own thermostat. If the ductwork is in unfinished space where you have access to it, this is pretty straightforward; if not, it means ripping open the house to run the ductwork. Depending on your air handler, you may also need to put in a bypass to prevent too much static pressure in the ductwork when only one zone is open.
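The two-zone arrangement described above boils down to fairly simple control logic, sketched here for illustration only. The function name, the dictionary keys, and the rule that the bypass opens whenever exactly one zone is calling are assumptions made for the sketch, not the behavior of any particular zone controller or damper.

```python
def zone_outputs(studio_calls_for_cooling: bool, house_calls_for_cooling: bool) -> dict:
    """Decide damper, AC, and bypass states from two thermostat calls.

    Assumptions (hypothetical, for illustration):
    - each zone damper simply follows its own thermostat;
    - the AC runs if either zone is calling;
    - the bypass opens when only one zone is open, to relieve
      static pressure in the ductwork.
    """
    only_one_zone_open = studio_calls_for_cooling != house_calls_for_cooling
    return {
        "studio_damper_open": studio_calls_for_cooling,
        "house_damper_open": house_calls_for_cooling,
        "ac_on": studio_calls_for_cooling or house_calls_for_cooling,
        "bypass_open": only_one_zone_open,
    }


if __name__ == "__main__":
    # Studio is hot from the computers, rest of the house is satisfied.
    print(zone_outputs(studio_calls_for_cooling=True, house_calls_for_cooling=False))
```

A real zone panel will add things like minimum run times and a default damper position when nothing is calling, so treat this as a picture of the idea rather than a wiring plan.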

As I hope you see this is a pretty big undertaking. It may be DIY, but it's advanced DIY. You're really better off trying to get the heat loads out of the living space, or even better distributed in the living space.

My guess is your computer equipment is probably not using as much power as you think it is. If you have two systems using around 2,000-2,500 watts total, you'll probably trip a typical residential 15A electrical circuit after several hours to a few days of operating, since a typical residential circuit is only capable of around 1,800 watts, and you probably have a little more on there than just your computer equipment.

I probably have a bit of a unique perspective here since, as Walta kind of alluded to, I design datacenter facilities as a day job -- I basically build "houses" to hold computers, usually thousands of computer servers. While some servers do guzzle down power, most are in the "several hundred watts" range, probably around 400-500 watts on average in terms of power consumption, and that would be with a good size disk array. Chances are your machines are using much less than their power supplies' rated capacities, especially when they're not actively doing heavy video rendering (such as in a complex game). A server especially, assuming it's dedicated to server duties, would normally not have any fancy video card at all, since it wouldn't normally be doing any video activity at all. VMware, for example, just shows a text mode login screen most of the time.

I would double check those power consumption numbers before you get too far along with a cooling system plan. I mention this in an effort to try to avoid you overbuilding your cooling system, which will not only mean wasted money, but will also result in less effective cooling. Get yourself a Kill-A-Watt meter, and use that to measure your AVERAGE power consumption over a few hours. You'll want to do this in two situations: one during a heavy gaming session, where the video card is hard at work and drawing a lot of power. The other measurement should be made with the systems relatively idle. Now you need to estimate how long you would be doing that serious gaming. If "serious gaming time" is only an hour or so, you might not want to size your cooling system for that load, and instead allow the room to creep up in temperature as you're gaming. That's your call to make though. You MUST size your cooling system to handle the IDLE time load though.
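One way to combine the two measurements described above is a time-weighted daily average, sketched below. The wattages and hours in the example are placeholders, not measurements from this thread, and the function name is made up for illustration.

```python
def average_load_watts(idle_w: float, gaming_w: float, gaming_hours_per_day: float) -> float:
    """Time-weighted average power over a 24-hour day.

    idle_w and gaming_w are the two averages read off the power meter;
    gaming_hours_per_day is your own estimate of heavy-use time.
    """
    if not 0 <= gaming_hours_per_day <= 24:
        raise ValueError("gaming_hours_per_day must be between 0 and 24")
    idle_hours = 24 - gaming_hours_per_day
    return (idle_w * idle_hours + gaming_w * gaming_hours_per_day) / 24


if __name__ == "__main__":
    # Placeholder numbers only: 300 W idle, 1200 W gaming, 4 hours a day.
    idle_w, gaming_w, hours = 300, 1200, 4
    print("minimum to size for (idle):", idle_w, "W")
    print("peak (gaming):", gaming_w, "W")
    print("daily average:", average_load_watts(idle_w, gaming_w, hours), "W")
```

The idle figure is the floor the cooling has to handle; where you land between the average and the peak depends on how much temperature creep you can tolerate during a session.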

The general rule is that all the power that goes into a computer comes out as heat. This is the basic starting point I use to lay out everything in a datacenter (square footage, cooling tonnage, even electric service needs for the building are determined by this). In large facilities, we work this out as a "watts per square foot" number, which in modern mid-density facilities these days is around 135-190 watts per square foot. Some facilities are much higher than that, but those are fancy, and very expensive, to build.

If you find you really are around 2,000-2,500 watts or so of AVERAGE power consumption (peaks of a few minutes or less don't really matter much in terms of cooling load), then I'd first make sure to put at least one more electrical circuit into your room, to make sure you're not running any long-term small overloads on your normal circuit, which is a dangerous condition -- you don't want to operate in an overload condition that isn't enough of an overload to actually trip a circuit breaker. Electric code actually specifies that in "continuous" service, which is defined as 3 hours or more, you cannot load ANY normal electrical circuit to more than 80% of its rating, which is normally the rating of the circuit breaker supplying the circuit. Once you've done that, you've taken care of the safety issue, which should be your first priority. I'd run a 20 amp circuit, to give yourself some extra room for future upgrades. I'd probably run two or three such circuits too, just to make sure you're covered, since it doesn't cost much more to add some extras now compared to what it would cost to add one more later.
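The circuit arithmetic above can be sketched as follows. This assumes a nominal 120 V branch circuit (typical in North America) and applies the 80% continuous-load limit mentioned above; the function names are illustrative, and this is not a substitute for having an electrician look at the actual wiring.

```python
import math

NOMINAL_VOLTS = 120  # assumption: standard North American branch circuit


def circuit_capacity_watts(breaker_amps: int, volts: int = NOMINAL_VOLTS) -> float:
    """Full rated capacity of a circuit in watts."""
    return float(breaker_amps * volts)


def continuous_limit_watts(breaker_amps: int, volts: int = NOMINAL_VOLTS) -> float:
    """80% of the rating, the limit for loads running 3 hours or more."""
    return breaker_amps * volts * 80 / 100


def circuits_needed(load_watts: float, breaker_amps: int) -> int:
    """Number of circuits needed to carry a continuous load within the 80% limit."""
    return math.ceil(load_watts / continuous_limit_watts(breaker_amps))


if __name__ == "__main__":
    for amps in (15, 20):
        print(f"{amps} A circuit: {circuit_capacity_watts(amps):.0f} W rated, "
              f"{continuous_limit_watts(amps):.0f} W continuous")
    print("20 A circuits for a 2500 W continuous load:", circuits_needed(2500, 20))
```

A 15 A circuit works out to 1,800 W rated and 1,440 W continuous, so a sustained 2,000-2,500 W load does not fit on one, and even a single 20 A circuit (1,920 W continuous) falls short of the upper end of that range.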

Now you get to the actual COOLING part. One ton of cooling, in theory, can remove about 3.5 kW of heat from a room. We only care about sensible cooling for heat from computer equipment. That means you need one ton of cooling (approx) to handle your ~2,500 watts of load, since you need to also handle a little thermal gain from the walls on a hot day. You can get minisplits in this range no problem, so that would be your easiest option. I've done plenty of designs for small equipment rooms cooled exactly this way.
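A minimal sketch of that sizing step, assuming one ton is 12,000 BTU/hr (about 3,517 W). The 500 W allowance for gain through the walls is a placeholder picked for the example, not a calculated number for this house.

```python
# One ton of cooling = 12,000 BTU/hr; at 3.412 BTU/hr per watt that is ~3,517 W.
WATTS_PER_TON = 12000 / 3.412


def tons_required(equipment_watts: float, envelope_gain_watts: float = 0.0) -> float:
    """Sensible cooling needed, in tons, for an equipment load plus other gains."""
    return (equipment_watts + envelope_gain_watts) / WATTS_PER_TON


if __name__ == "__main__":
    equipment_w = 2500          # upper end of the load discussed in the thread
    wall_gain_w = 500           # placeholder allowance, not a measured value
    print(f"equipment only: {tons_required(equipment_w):.2f} tons")
    print(f"with wall gain: {tons_required(equipment_w, wall_gain_w):.2f} tons")
```

Both results come out under one ton, which is why a 3/4 to 1 ton unit is the natural size to look at if the measured load really is that high.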

You have one other wrinkle though. Datacenter cooling differs from normal cooling design in one more big way besides sheer scale: in a datacenter, the AIRFLOW part NEVER stops. All the cooling units run the blower CONTINUOUSLY, since you have to remove heat from hot spots (the servers) constantly. Only the compressors cycle on and off to control the temperature; the blower does NOT cycle. There are some fancy variable speed units now that also control air pressure, but that's too much of a tangent for this thread. For a minisplit, you want to configure it to constantly run the fan. You might need some additional fans (I jokingly refer to this as "additional fannage") to help move heat from the hot spots, since you probably don't have a raised floor, and the minisplit alone probably won't have the airflow capacity you'd need to handle a larger room in this situation. That will need some experimentation though -- don't put in extra fans before you know you really need them.
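The "fan always on, compressor cycles" behavior can be pictured with a small sketch. The setpoint, the 2-degree deadband, and the class itself are assumptions for illustration; they do not describe how any particular minisplit or datacenter unit is actually programmed.

```python
from dataclasses import dataclass


@dataclass
class CoolingUnit:
    """Toy model: the blower runs continuously, only the compressor cycles."""
    setpoint_f: float = 75.0   # hypothetical setpoint
    deadband_f: float = 2.0    # hypothetical deadband to avoid short cycling
    compressor_on: bool = False
    fan_on: bool = True        # never switched off in this model

    def update(self, room_temp_f: float) -> None:
        """Switch the compressor with hysteresis around the setpoint."""
        if room_temp_f >= self.setpoint_f + self.deadband_f / 2:
            self.compressor_on = True
        elif room_temp_f <= self.setpoint_f - self.deadband_f / 2:
            self.compressor_on = False
        # Inside the deadband the compressor keeps its previous state.


if __name__ == "__main__":
    unit = CoolingUnit()
    for temp in (74.0, 77.0, 75.5, 73.5):
        unit.update(temp)
        print(f"{temp} F -> fan_on={unit.fan_on}, compressor_on={unit.compressor_on}")
```

The point of the sketch is only that airflow and cooling are controlled separately: the air keeps moving across the hot spots even when the compressor is resting.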

Transfer ducts will help, but probably won't be enough if you really have the load levels you think you do. If you end up in an AVERAGE load of around 500-600 watts or so, then try the transfer duct. I wouldn't actually start cutting any holes until you verify your power consumption though.

BTW, typical building design cooling load for ONE person is about 100 watts. That will give you some perspective. 2,000 watts of server load is the equivalent of having 20 people in that room with you.

Bill

What I meant with the wattage was what they were capable of handling PSU-wise. Let me actually run some calculations. My 4K-8K video processing gets extremely intensive, which is when the heat is most unbearable. When I throw in games doing similar, I have issues as well. During music processing, I am pretty good temp-wise and often get cold. With both desktops doing this and me streaming on top of it, it really gets toasty in here; the last temp I took was about 94 degrees. During this, however, the door and window do need to be shut, as I need the soundproofing. I am going to sit down and reconnect some of our devices (network issues recently with a reset) and see what I am truly pulling for wattage though. Let me get back to both of you when I do some decent sessions over the next day or so and report back.

You guys' help has been awesome so far!

What about the idea of getting the equipment out of the conditioned part of the house and letting it live in, say, the garage while you operate the equipment from a terminal in the house?

In a few weeks some of us will be turning on our heating equipment. Seems like this equipment will almost heat your house.

Walta

I forgot to mention earlier: don't bother with MLV sheet for soundproofing, there are much better ways to soundproof a room. Depending on what you need, a second layer of drywall might be enough, or you might need to go all the way up to a double stud wall. Somewhere in between is hanging drywall on resilient channel. MLV between drywall layers will be only marginally better than a double layer of drywall alone.

I'd expect your heaviest load time would be doing video processing, especially game-style 3D rendering. You really need to measure power consumption with something like a Kill-A-Watt meter that will get you a reasonably accurate reading. From the kW consumption of the equipment, you can calculate the heat (and cooling) loads easily, and accurately.

BTW, Walta: I don't think the OP ever mentioned a climate zone, but if he's in an area that will need heating in the winter, the computer equipment will likely contribute a good amount of heat towards his home's heating needs. The commercial datacenters I work with at work don't ever run heat -- they run cooling year round, just needing a little less cooling in the winter. I've put a lot of effort into designing economizer systems for those facilities to minimize how much they have to run the cooling equipment in the winter -- the goal is to run only the air handlers for air circulation, and minimize the amount of energy needed for the actual "making cold" part as much as possible.

Bill

Right now, just at idle and left on, I am pulling 2.03kw on desktop and it's averaging about 82 or so watts while typing. I have a Kill-A-Watt meter on the way at this time though. At the moment, I am using a Kasa strip to get a general idea.

As for getting the devices out of the room, I haven't really thought about that with the things in here. We have the server we dumped into the garage that we are getting ready to use a firewall, etc. I definitely don't want to do terminal over Ethernet like RDP. Is there something else you had in mind? Running DisplayPort cables through the wall sounds a little meh haha.

I totally forgot my climate zone: I am in Colorado. Summers usually hit about 90-100 degrees and stay above 100 in full sun most of the summer. Winter/fall starts in about September. We usually get our first snow around this time, but we don't get a lot. During winter we hover between 20 and 40 degrees mostly. Occasionally we will drop down to 0 or 10, but not for very long. Any snow we get is almost certainly gone the next day. We are high desert here, essentially.

I'll get a general idea on processing today when I max out my GPU. I may get limited though, so we will see. More importantly, I will have to check my wife's. Hers is my older GPU, and that one, for what it is, gets really toasty. On average its temp rode at 80 degrees C. My RTX 3080 Ti gets hot, but at times I feel like it's nowhere near that one.

We do run cooling most of the time, and after that, for a good portion of the time the unit stays off as we open up the north-side windows in the mornings to pull the cold air in and trap it. For a huge portion of that time we can just leave everything open. I had wanted to run our air handler 24/7, but it does use a good amount of energy staying on. I almost think it saves more energy with the starts and stops, from what I have seen in the graphs from our three energy-monitoring sources. From what I see, we sit in the top 25% range for efficiency in our area at the moment, though the accuracy of that is probably moot. When I set the fan to run 24/7, though, it pushes us down towards the top 50%. Maybe something is wrong?

Regarding running your servers and desktop in another room/garage -- it's absolutely possible to do so with a fully "in person" experience (not at all remote).

There are two general ways. The first, if you share a wall with your garage or are at least within 15ft, is to simply get longer cables and run them from one room to another. This is very distance dependent, though.

The second is notably more expensive but will work over much farther distances: get something like an active Thunderbolt 3 dock and some optical Thunderbolt cables to make your connections. Those have a range of closer to 75ft, if I remember correctly, and can trivially carry raw video, audio, USB, etc. over those distances. This is the solution that Linus Sebastian of "Linus Tech Tips" used -- his (very high powered) desktops are all rack mounted in his basement but he accesses them as if they were under his desk.

Yes, both of those require pulling cables through walls. I'm not sure why that would sound 'meh', though -- running cables through walls is one of the primary tasks of any wall.

Bill,

We had also thought of an ERV. The house is too tight in my opinion as well, and we are negatively pressured. We have zero return to the outside in general at the moment and still had plans for another exhaust fan in the MBR bathroom for moisture issues until we knock down the wall causing the issue. Along with this, we still have plans for a kitchen hood with a good grease trap, etc.

I am going to kick the fan on 24/7 after I measure this and see where we go temporarily. The little girl has complained of being cold at night as well, as we are on the tail end of the hot season; it's just slightly too soon to flip to heat.

I don't think any ERV is going to be able to keep up with your equipment's heat load. Once you get your Kill-A-Watt meter and have some good measurements, post back here and I can give you a better idea what you might need to do.

I have some familiarity with your climate since I have family in Aurora near Parker. Cool nights, and very dry all the time. You might try putting things like your server in a garage. You won't really need to worry about server things freezing as long as they have some shelter, since they produce their own heat. What you DO need to worry about is condensation, but that shouldn't be much of an issue in your climate zone. Some of the big crypto coin mining places just use outside air exchange instead of real "cooling". I've even designed a few of those places. It's basically a box (warehouse) with a big vent and air filter on one end, and lots of fans on the other blowing out. Pretty simple. You could do the same for your server and network stuff easily enough, since you wouldn't have issues with video for those and could use a remote terminal.

For your gaming stuff, you have video signals to think about, and remote desktop or the like isn't really an option. You could potentially run HDMI or DisplayPort cables through the wall, but there are distance limitations for those so you can't go very far without bumping up to some fancier technologies (I can tell you from experience the fiber optic HDMI cables work very well, for example, but they are expensive). If you at least get the server out of the indoor space, you've moved SOME of the heat out.

Once you have measurements on your other machines let me know and I can give you some other ideas.

Bill
