Induction cooktops and peak electric loads

I’ve been reading Dana’s explanations at GBA that peak electric loads are the primary driver of the total generating capacity any given utility needs. I understand that devices like electric point-of-use water heaters can easily drive up peak demand on the grid infrastructure. What about induction cooktops? My understanding, which is limited, is that they have a much higher peak power draw than standard electric ranges. I’d really like to install one during my remodel because they are much more efficient than standard ranges and heat things faster, but I won’t go there if it has an effect similar to a point-of-use water heater. Does anyone know the details of peak electricity demand for these devices vs. a standard electric range?

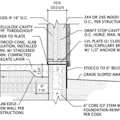

GBA Detail Library

A collection of one thousand construction details organized by climate and house part

Replies

Eric,

As far as I know, the power draw of an induction cooktop is less, not more, than the power draw of an electric resistance cooktop.

Used in the same way, for the same tasks, the peak loads from an induction stove will be similar to or less than those of a standard electric stove.

Induction cooktops seem to have higher peak draws because their high prices mean they get high-end features, like 12,000 BTU/h burners that are competitive with a lot of gas stoves. High-end electric radiant stoves can come pretty close to the same peak output with 3,000-watt (about 10,200 BTU/h) elements.
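Burner ratings in BTU are really BTU per hour (a rate), so they convert directly to watts. Here's a quick sketch for checking those numbers, using the standard factor of roughly 3.412 BTU/h per watt; the function names are just made up for illustration:

```python
# One watt of continuous power is about 3.412 BTU per hour.
BTU_PER_HOUR_PER_WATT = 3.412142


def watts_to_btu_per_hour(watts: float) -> float:
    """Convert an electrical rating in watts to BTU/h."""
    return watts * BTU_PER_HOUR_PER_WATT


def btu_per_hour_to_watts(btu_per_hour: float) -> float:
    """Convert a burner rating in BTU/h to watts."""
    return btu_per_hour / BTU_PER_HOUR_PER_WATT


if __name__ == "__main__":
    print(round(watts_to_btu_per_hour(3000)))   # 10236: a 3,000 W radiant element
    print(round(btu_per_hour_to_watts(12000)))  # 3517: a 12,000 BTU/h burner
```

So a "12,000 BTU" induction burner is roughly a 3.5 kW element, only modestly more than a 3 kW radiant one.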

For most cooking, cranking it up is just going to badly burn your food, so it doesn't matter; it mostly comes into play when you're boiling water. If you don't want the higher peak draw, just leave the burner a bit lower and wait longer for your water to boil.

Thanks to both of you. It sounds like there is an induction cooktop in my future.

We are replacing our standard electric stove with induction. Do a bit of googling and check out the specs. Induction cooks differently and is therefore much more efficient than old-school electric or gas.

Aggregate peak demand is what matters. If everyone with an electric cooktop changed to induction, total demand at dinner time would be lower, because it takes fewer kWh to cook something using induction.

And there are other ways to reduce aggregate peak demand for cooking, for example insulated cookers:

http://www.lowtechmagazine.com/2014/07/cooking-pot-insulation-key-to-sustainable-cooking.html

When demand charges are assessed on commercial and industrial customers, they're typically based on the heaviest-use hour or half-hour during the billing period, but in some cases on as little as the heaviest quarter-hour.
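For anyone curious how that billing-demand number falls out of interval-meter data, here's a minimal sketch. It assumes one reading per minute and fixed, clock-aligned intervals (some utilities use sliding windows instead), and the function name is hypothetical:

```python
from typing import Sequence


def peak_interval_demand_kw(minute_kw: Sequence[float], interval_minutes: int) -> float:
    """Return the highest average demand (kW) over fixed, back-to-back intervals.

    Assumes one power reading per minute and clock-aligned intervals
    (no sliding window). A trailing partial interval is ignored.
    """
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    averages = [
        sum(minute_kw[start:start + interval_minutes]) / interval_minutes
        for start in range(0, len(minute_kw) - interval_minutes + 1, interval_minutes)
    ]
    if not averages:
        raise ValueError("need at least one full interval of readings")
    return max(averages)


if __name__ == "__main__":
    # One hour: 1 kW background load plus a 5-minute, 10 kW burst (minutes 10-14).
    readings = [1.0] * 60
    for minute in range(10, 15):
        readings[minute] = 10.0
    for window in (15, 30, 60):
        print(window, peak_interval_demand_kw(readings, window))  # 4.0, 2.5, 1.75
```

The same 5-minute burst bills as 4 kW on a quarter-hour basis but only 1.75 kW on an hourly basis, which is why the short windows are the harshest on brief spikes.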

A typical induction burner draws 1,500-3,000 watts at full power. While you might draw that much per burner when bringing something up to temperature, most longer cooking happens at much lower power levels. In theory you might be able to push an induction cooktop to 12,000 or even 15,000 watts by turning every burner to max, but that's not how people really use them.

There has been discussion about installing smaller local batteries on induction cooktops to limit peak power draws, but AFAIK no commercial products exist (yet). If demand charges become standard practice in residential rate structures, it's not a huge leap of technology or cost to get there.
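The battery-buffer idea is simple enough to sketch. This is a hypothetical model, not any real product: the grid supplies up to a fixed cap, a battery covers anything above it and recharges from spare headroom, the battery starts full, and conversion losses are ignored.

```python
from typing import List, Sequence, Tuple


def simulate_buffered_cooktop(
    demand_w: Sequence[float],
    grid_cap_w: float,
    battery_wh: float,
    step_minutes: float = 1.0,
) -> Tuple[List[float], float]:
    """Hypothetical peak-limited cooktop with a battery buffer.

    Each step, the grid supplies at most grid_cap_w; the battery covers any
    demand above the cap and recharges from leftover headroom. Returns the
    grid draw per step (W) and the energy (Wh) the battery could not cover.
    """
    hours = step_minutes / 60.0
    charge_wh = battery_wh  # assumption: battery starts full
    grid_w: List[float] = []
    unmet_wh = 0.0
    for demand in demand_w:
        if demand > grid_cap_w:
            # Battery tops up whatever the capped grid connection can't supply.
            needed_wh = (demand - grid_cap_w) * hours
            supplied_wh = min(needed_wh, charge_wh)
            charge_wh -= supplied_wh
            unmet_wh += needed_wh - supplied_wh
            grid_w.append(grid_cap_w)
        else:
            # Spare headroom under the cap recharges the battery.
            headroom_wh = (grid_cap_w - demand) * hours
            recharge_wh = min(headroom_wh, battery_wh - charge_wh)
            charge_wh += recharge_wh
            grid_w.append(demand + recharge_wh / hours)
    return grid_w, unmet_wh


if __name__ == "__main__":
    # 5 minutes at full boil (7,000 W) followed by 10 minutes of simmering (1,000 W).
    profile = [7000.0] * 5 + [1000.0] * 10
    grid, unmet = simulate_buffered_cooktop(profile, grid_cap_w=3000.0, battery_wh=500.0)
    print(f"{max(grid):.0f} W peak grid draw, {unmet:.1f} Wh unmet")
```

With a 3,000 W cap and a 500 Wh battery, that 5-minute 7,000 W boil never pulls more than the cap from the grid. Shrink the battery to 100 Wh and roughly 233 Wh of the boil can't be delivered at full power, so sizing the battery to the longest realistic high-power burst is the main design question.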

Standard electric ranges waste a double-digit percentage of their power heating the air under and around the pot and the thermal mass of the burner itself. Induction ranges are definitely more efficient, bringing the pot or pan up to temperature more quickly and requiring less power to maintain it, but the gain is incremental, not a large cut.
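To put a number on "incremental", here's a back-of-envelope sketch for bringing 2 L (about 2 kg) of water from 20 °C to 100 °C. The 75% and 85% efficiencies are placeholder assumptions for radiant and induction, not measured figures; plug in whatever numbers you trust:

```python
SPECIFIC_HEAT_WATER_J_PER_KG_K = 4186.0  # approximate, near room temperature
JOULES_PER_WH = 3600.0


def boil_energy_wh(water_kg: float, start_c: float, end_c: float, efficiency: float) -> float:
    """Electrical energy (Wh) to heat water, given an assumed burner-to-pot efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    heat_into_water_j = water_kg * SPECIFIC_HEAT_WATER_J_PER_KG_K * (end_c - start_c)
    return heat_into_water_j / JOULES_PER_WH / efficiency


def minutes_at_power(energy_wh: float, watts: float) -> float:
    """Time to deliver energy_wh at a constant electrical draw."""
    return energy_wh / watts * 60.0


if __name__ == "__main__":
    # Placeholder efficiencies (assumptions, not measurements).
    for label, eff in (("radiant", 0.75), ("induction", 0.85)):
        wh = boil_energy_wh(2.0, 20.0, 100.0, eff)
        print(f"{label}: {wh:.0f} Wh, {minutes_at_power(wh, 2000.0):.1f} min at 2,000 W")
```

Under these assumptions induction uses about 219 Wh versus 248 Wh, roughly 12% less energy, and finishes in less time at the same draw. That lowers the energy used per meal but leaves the instantaneous draw of a burner at a given setting unchanged.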

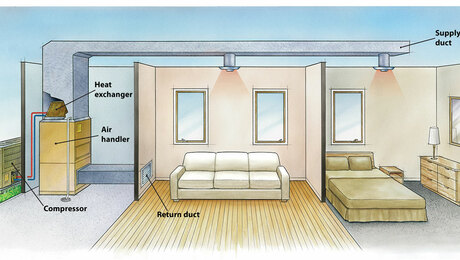

The half-hourly draw of an induction cooktop, the way most people use them, isn't huge: probably less than 2,000 watts averaged over the half-hour, or 1,200 watts over a whole hour for long simmering meals, perhaps with 5-minute spikes as high as 10,000 watts. While significant, that's pretty small compared with running a 5-8 ton air conditioner for a half-hour or taking a 15-minute shower with an electric tankless water heater drawing 15,000-24,000 watts the whole time, and probably smaller than the 2,500-3,500 watts of an electric clothes dryer.
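The arithmetic behind that comparison is just energy divided by the window length. A minimal sketch, using the wattages and durations from the paragraph above; the function name is hypothetical, and any time not covered by a listed load counts as zero draw:

```python
from typing import Sequence, Tuple


def window_average_kw(segments: Sequence[Tuple[float, float]], window_minutes: float = 30.0) -> float:
    """Average demand (kW) over a billing window.

    segments: (kW, minutes) pairs for whatever runs during the window;
    any time not covered by a segment counts as zero draw.
    """
    used_minutes = sum(minutes for _, minutes in segments)
    if used_minutes > window_minutes:
        raise ValueError("segments run longer than the window")
    energy_kwh = sum(kw * minutes / 60.0 for kw, minutes in segments)
    return energy_kwh / (window_minutes / 60.0)


if __name__ == "__main__":
    print(f"{window_average_kw([(10.0, 5.0)]):.1f} kW")   # cooktop: one 5-minute, 10 kW spike
    print(f"{window_average_kw([(15.0, 15.0)]):.1f} kW")  # 15-minute tankless shower, low end
    print(f"{window_average_kw([(24.0, 15.0)]):.1f} kW")  # 15-minute tankless shower, high end
```

A 5-minute 10 kW spike averages out to about 1.7 kW over a half-hour, while the 15-minute tankless shower averages 7.5-12 kW over the same window.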

The nightmare scenario from a grid-abuse point of view is the family that gets home from work at 6 PM, plugs the electric car into a non-grid-aware charger, cranks up the AC, puts dinner in the oven, then runs a load of towels in the dryer while they take back-to-back showers with the tankless, all occurring during the period when PV exports to the grid are fading fast. This is what creates the huge ramp rate in grid draw that the CAISO people have dubbed the "duck curve", and other grid operators have referred to as "Nessie" (after the Loch Ness monster).
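The ramp problem comes from subtracting solar output from demand and looking at how fast the remainder rises. A minimal sketch; the hourly numbers are invented purely for illustration (not CAISO data), and the function names are hypothetical:

```python
from typing import List, Sequence, Tuple


def net_load(demand_mw: Sequence[float], solar_mw: Sequence[float]) -> List[float]:
    """Load the rest of the fleet must serve once solar output is subtracted."""
    if len(demand_mw) != len(solar_mw):
        raise ValueError("series must be the same length")
    return [d - s for d, s in zip(demand_mw, solar_mw)]


def steepest_ramp(series: Sequence[float]) -> Tuple[float, int]:
    """Largest step-to-step increase and the index where it starts."""
    if len(series) < 2:
        raise ValueError("need at least two points")
    ramps = [later - earlier for earlier, later in zip(series, series[1:])]
    biggest = max(ramps)
    return biggest, ramps.index(biggest)


if __name__ == "__main__":
    # Invented hourly numbers for 3 PM to 8 PM, purely illustrative.
    demand = [30, 31, 33, 36, 38, 37]
    solar = [10, 8, 5, 2, 0, 0]
    residual = net_load(demand, solar)
    print(residual)                 # [20, 23, 28, 34, 38, 37]
    print(steepest_ramp(residual))  # (6, 2): the 5 PM to 6 PM hour
```

Demand itself only rises 3 MW in that hour, but because solar drops at the same time, the other generators have to pick up 6 MW. Every appliance switched on at 6 PM adds to that ramp.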

http://www.greentechmedia.com/articles/read/retired-cpuc-commissioner-takes-aim-at-caisos-duck-curve

Well, I guess this finally puts the kibosh on my habit of using my arc welder to broil (burn) steaks during those times when my barbecue runs out of propane. At least I will no longer get the suspicious looks from neighbors when I put on the eye shield.

Dana,

I saw an interview with a utility manager in Britain who recounted that each week they monitor the popular television series EastEnders, and when an episode finishes they start up a generating station across the Channel in Calais to cover the demand as viewers put their kettles on for tea.

There are well known short term grid load spikes in the Netherlands surrounding the fact that EVERYBODY sits down to eat dinner at 6PM, not 6:01 (I think it's written into the Dutch constitution! :-) ) Local gas peakers (or sometimes imports from Germany, which has massive grid connectivity to NL) manage those loads.

It's odd that the nearest/cheapest peaker to manage predictable blips in grid load in the UK would be in Calais, given how little cross-Channel grid capacity there is. I'm suspicious of the absolute veracity of his statement, even if peak grid power is imported from Calais from time to time. The UK grid is not synchronized to the Western European grid (even though it runs at the same nominal frequency), so power imported from France comes via the only existing DC transmission line to France, which has substantially less capacity than the only other HVDC link, the one to the Netherlands completed a handful of years ago. (Why France, and not NL?) The guy had to be just blowing smoke.

On most days/weeks, domestic peaker power in the UK would be much cheaper than contracting with low-capacity-factor peakers on the other side of the rill, due to the high transmission costs and the efficiency losses in the intervening converter stations. There may have been some weeks when EastEnders-timed peaks required importing that VERY expensive peak power, but I doubt it was every week or even most weeks. Maybe it happened exactly once, and it stuck in his memory because it seemed so nonsensical.

http://i.guim.co.uk/static/w-620/h--/q-95/sys-images/Environment/Pix/columnists/2011/4/12/1302607626657/Supergrid-001.jpg

http://www2.nationalgrid.com/About-us/European-business-development/Interconnectors/france/

One thing they don't have much of in the UK is air conditioning power use peaks, but that may be changing over the coming decades, as the numbers of truly hot days rise, and air-to-air heat pumps become more commonplace.

Varying the output of wind farms has become an effective load-tracking tool for grid operators in the UK (and the US), at a much lower cost than running a fossil-fired peaker over a lossy transmission route. With any significant wind, they can throttle the baseload generators up a bit, throttle the wind back a bit, and then vary the wind output to track the load, limiting the amount of spinning reserve needed to keep the grid stable. Taking a small hit in capacity factor on the wind resources is still a lot cheaper than operating low-efficiency, low-capacity-factor peakers.
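Here's a toy version of that dispatch logic, as a sketch under simple assumptions: baseload is held flat, curtailable wind fills whatever load remains up to its available output, and anything left over falls to reserves or peakers. Real dispatch also deals with forecasts, ramp limits and frequency response, and the names here are hypothetical:

```python
from typing import List, NamedTuple, Sequence


class Dispatch(NamedTuple):
    wind_mw: float       # wind actually delivered
    curtailed_mw: float  # available wind deliberately held back
    shortfall_mw: float  # load left for reserves/peakers


def track_load_with_wind(
    load_mw: Sequence[float],
    wind_available_mw: Sequence[float],
    baseload_mw: float,
) -> List[Dispatch]:
    """Hold baseload flat and let curtailable wind follow the remaining load."""
    if len(load_mw) != len(wind_available_mw):
        raise ValueError("series must be the same length")
    plan: List[Dispatch] = []
    for load, available in zip(load_mw, wind_available_mw):
        residual = max(load - baseload_mw, 0.0)  # assumes no overgeneration handling
        wind = min(available, residual)
        plan.append(Dispatch(wind, available - wind, residual - wind))
    return plan


if __name__ == "__main__":
    for step in track_load_with_wind([40, 45, 50], [18, 18, 18], baseload_mw=30):
        print(step)
```

With 18 MW of wind available and 30 MW of baseload, the wind follows the load from 10 to 18 MW while giving up 8 MW and then 3 MW of output; only at the 50 MW step does a 2 MW shortfall need other resources. The curtailed megawatt-hours are the "small hit in capacity factor".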