How do deviations in average winter temperature affect energy consumption?

Off the top of my head I would expect a five percent colder winter to cost five percent more for heating, all things being equal.

But it seems to be more complicated than that in our experience. For example, if we start with a client’s modeled energy consumption for an average heating season, and winter turns out to be five percent warmer, we seem to find that the client consumes more energy than we’d expect (more than five percent less). Likewise, if we have five percent more heating degree days, clients seem to do better than we’d predict (they consume less than five percent more).

Any guidance? If this is non-linear, is there a mathematical way to adjust modeled energy usage to account for different winter scenarios?

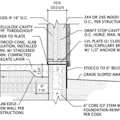

GBA Detail Library

A collection of one thousand construction details organized by climate and house part

Replies

The initial problem in considering your question is that it isn't clear what 5% colder really means. The various temperature scales, Fahrenheit, Celsius, Kelvin, are different and sort of arbitrary. Is 95 degrees the same per cent different from 100 in each scale? If we use degree days, don't we have the same problem? I'm stumped.
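A quick sketch of the scale problem, reading the 95 and 100 degree example as °F and converting (the helper names are just for illustration): the same pair of temperatures gives a different percentage difference in each scale.

```python
def f_to_c(temp_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def c_to_k(temp_c: float) -> float:
    """Convert degrees Celsius to kelvin."""
    return temp_c + 273.15


def percent_difference(low: float, high: float) -> float:
    """Percent by which `low` falls below `high`, in whatever units both share."""
    return 100.0 * (high - low) / high


low_f, high_f = 95.0, 100.0
low_c, high_c = f_to_c(low_f), f_to_c(high_f)
low_k, high_k = c_to_k(low_c), c_to_k(high_c)

print(f"Fahrenheit: {percent_difference(low_f, high_f):.2f}%")  # 5.00%
print(f"Celsius:    {percent_difference(low_c, high_c):.2f}%")  # 7.35%
print(f"Kelvin:     {percent_difference(low_k, high_k):.2f}%")  # 0.89%
```

Percent changes in degree days don't suffer from the arbitrary zero, because degree days are differences from a base temperature and converting °F to °C just rescales them by 5/9. The catch is that the result then depends on which base temperature you pick.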

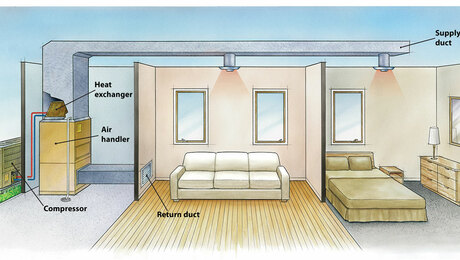

One issue is that the correct base temperature to use in the degree-day calculation is different in different buildings, depending on insulation level, parasitic heating, solar gain, and thermostat set point. Another issue is that heating is only one energy use--there may be others from the same fuel source, which might include cooking, clothes drying and water heating if it's natural gas. (Fewer if it's oil, more if it's electricity.)
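To illustrate the base-temperature point with made-up numbers: the sketch below computes heating degree days for five hypothetical days at a 65°F base and at a 55°F base, then repeats the calculation for a winter in which every day is 3°F warmer. The same weather change shows up as a different percentage change depending on the base, so a building whose actual balance point is lower than the base used for published HDD won't scale one-for-one with those HDD.

```python
def heating_degree_days(daily_means_f: list[float], base_f: float) -> float:
    """Sum of (base - daily mean) over the days that are colder than the base."""
    return sum(max(0.0, base_f - t) for t in daily_means_f)


# Hypothetical daily mean outdoor temperatures (°F), made up for illustration.
normal_days = [20.0, 30.0, 40.0, 50.0, 60.0]
warmer_days = [t + 3.0 for t in normal_days]  # same days, each 3°F warmer

for base in (65.0, 55.0):
    hdd_normal = heating_degree_days(normal_days, base)
    hdd_warmer = heating_degree_days(warmer_days, base)
    change = 100.0 * (hdd_warmer - hdd_normal) / hdd_normal
    print(f"base {base:.0f}°F: {hdd_normal:.0f} -> {hdd_warmer:.0f} HDD ({change:+.1f}%)")
```

With these numbers the 65°F base drops from 125 to 110 HDD (-12.0%), while the 55°F base drops from 80 to 68 HDD (-15.0%).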

Norm,

Welcome to the not-so-simple world of "weather normalization."

Plenty of academic papers have been written on the subject. It's not simple. Here are three resources to get you started:

Degree Days - Handle with Care!

An Energy Manager’s Intro to Weather Normalization of Utility Bills

A Comparison of Weather Normalization Techniques for Commercial Building Energy Use

Martin, thanks for the proper terminology and the resources. These reports seem to show a completely linear relationship between energy intensity for heating and heating degree days. So we must be seeing some other variables clouding things up--behavior changes, base load changes etc.

Stephen--I framed the question in terms of HDD, but I should have been clearer.

Charlie--Thanks, I was trying to ignore those other variables with "all things being equal."

Norm, Figs. 1.7-1.9 in Martin's second reference show a nonlinear relationship. HDDs with different base temperatures are similarly not linearly related.

Norm,

I gather:

- Your clients sound like residential types

- Clients are not necessarily doing their own tracking and you want to show year-over-year savings from unspecified projects you've provided

- The heating season is the focus of interest

- Electric usage is measured by a single meter. The HVAC is not independently metered (I'm guessing).

- (You've not specified the fuel used for heating)

And,

1) You've asked a specific question about the linearity of energy usage for heating given either a warmer or colder winter than expected.

2) You want a model that more accurately predicts usage

What I'd consider:

(1) You're correct in expecting a linear response of heating energy usage versus temperature, at least for conduction and convection heat flows.

However, I'm curious how the calculation is performed. If you are increasing the base energy by, say, 5% for a 5% colder winter, remember that only the HVAC usage should see this increase. If you apply the increase to the whole of the base, you're saying the TV uses 5% more too. That's possible for other reasons, but the weather shouldn't cause it. In this example, the customer would use less than you expect. Similarly, in a warmer winter, applying the reduction to the whole of the base would mean the TV is used 5% less. That's unlikely, so the modeling would show the customer using more than expected. This might not be your issue, but it does fit what you've described.
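The arithmetic in that paragraph, with made-up numbers: suppose the model splits a season into 600 units of heating and 400 units of everything else (the split and the function names are assumptions for illustration). Scaling the whole total by the HDD ratio overshoots in a colder season and undershoots in a warmer one, which is the effect described above.

```python
def whole_base_estimate(heating: float, other: float, hdd_ratio: float) -> float:
    """Scales all modeled use with HDD, as if the TV also responded to weather."""
    return (heating + other) * hdd_ratio


def heating_only_estimate(heating: float, other: float, hdd_ratio: float) -> float:
    """Scales only the weather-dependent heating portion with HDD."""
    return heating * hdd_ratio + other


# Hypothetical modeled season: 600 units of heating, 400 units of other loads.
heating, other = 600.0, 400.0

for label, ratio in (("5% more HDD", 1.05), ("5% fewer HDD", 0.95)):
    whole = whole_base_estimate(heating, other, ratio)
    split = heating_only_estimate(heating, other, ratio)
    print(f"{label}: whole base scaled {whole:.0f}, heating only scaled {split:.0f}")
```

If the house actually behaves like the heating-only line (1030 and 970), it will look 20 units better than the whole-base prediction (1050) in the colder season and 20 units worse than it (950) in the warmer one.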

(2) Modeling and comparing usage to predicted.

a) Cloud cover is related to air temperature but also partly independent of it, so whether a winter is colder or warmer than expected, you could see variation due to solar heat gain.

b) Humidity in the dwelling and thermostat settings play a key role and may be a source of variation.

c) If you can get it, it's useful to have a couple of years of data to define base usage. I like to look at electrical usage during months where there is little heating or cooling going on. This defines (roughly) the non-HVAC usage. I usually treat this as a constant from month to month. When I do tracking for my home, I use a spreadsheet and make corrections for degree days. I've found it doesn't matter too much to me, however. I mainly look at year-over-year yearly or seasonal data. I know the basic heat transfer going on with my projects and so know that they have to work. For non-HVAC projects, I take the manufacturer's data. I take the longer view because I know there's a lot of variability in tracking usage from a single meter.

d) Rare is the project that affects only heating season. You may be able to document savings during cooling season too.

e) Beware of the "all things being equal" in the modeling. There are a lot of reasons why they may not be equal. Occupant behavior can be a huge source of variation but saying so can be a challenge in diplomacy.

f) I'd look for the simplest modeling that gives you and the client confidence that you're on track. A more complicated model might be perceived as smoke and mirrors to get a desired answer.

g) Consider applying your model to a "known" case (maybe your house). That way you have an independent means of gauging your model and its predictive abilities.
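The base-load approach in c) can be sketched as below, assuming monthly bills from a single meter and monthly HDD for the same periods. The 100-HDD cutoff for a "quiet" month, the function names, and all the numbers are hypothetical. Base load is treated as a constant from month to month, and the comparison is heating use per degree day rather than raw totals. Running it on a house whose changes you already know, as in g), is one way to see whether it behaves sensibly.

```python
def base_load(hdd: list[float], use: list[float], quiet_hdd: float = 100.0) -> float:
    """Average monthly use in months with little heating (HDD below quiet_hdd)."""
    quiet = [u for h, u in zip(hdd, use) if h < quiet_hdd]
    return sum(quiet) / len(quiet)


def heating_use_per_hdd(hdd: list[float], use: list[float], quiet_hdd: float = 100.0) -> float:
    """Use above a constant monthly base load, divided by the year's total HDD."""
    base = base_load(hdd, use, quiet_hdd)
    heating_use = sum(use) - base * len(use)
    return heating_use / sum(hdd)


# Hypothetical monthly HDD (Jan..Dec) and synthetic kWh, made up for illustration.
hdd_year1 = [1000, 850, 700, 400, 150, 0, 0, 0, 0, 350, 650, 900]
hdd_year2 = [1.1 * h for h in hdd_year1]        # a 10% colder year
use_year1 = [500 + 1.0 * h for h in hdd_year1]  # before the project
use_year2 = [500 + 0.8 * h for h in hdd_year2]  # after the project

before = heating_use_per_hdd(hdd_year1, use_year1)
after = heating_use_per_hdd(hdd_year2, use_year2)
print(f"raw change in total use: {100 * (sum(use_year2) / sum(use_year1) - 1):+.1f}%")
print(f"heating use per HDD: {before:.2f} -> {after:.2f} ({100 * (after / before - 1):+.1f}%)")
```

With these invented numbers, total use drops only about 5.5% because the second year was 10% colder, while heating use per HDD drops from 1.00 to 0.80.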